
YOLOv3: Darknet Code Walkthrough (5): Weights and Feature Storage


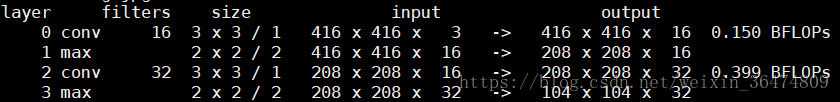

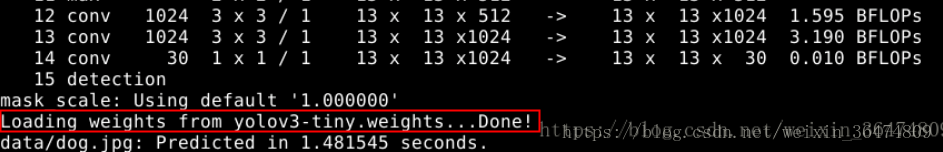

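Background: we removed two convolutional layers to make the network smaller.

Goal: find out how the network's weights are read and stored, and locate the convolution-related computation in the network.

Related articles:

- YOLOv3: Darknet Code Walkthrough (1): Installing Darknet
- YOLOv3: Darknet Code Walkthrough (2): A First Look at the Code
- YOLOv3: Darknet Code Walkthrough (3): The Convolution Operation
- YOLOv3: Darknet Code Walkthrough (4): Changing the Structure and Training
- YOLOv3: Darknet Code Walkthrough (5): Weights and Feature Storage
- YOLOv3: Darknet Code Walkthrough (6): A Simplified Program and Splitting the Convolution

Contents

- 1. Creating and running a convolutional layer
- 1.1 make_convolutional_layer
- 1.1.1 What the layer parameters mean
- 1.2 The forward_convolutional_layer function
- 2. How the weights are passed in
- 2.1 Functions involved in passing the weights
- 2.1.2 forward_convolutional_layer
- 2.1.3 parse_convolutional
- 2.2 load_weights
- 2.2.1 Background: fopen
- 2.2.2 Background: fread
- 2.2.3 Background: the ftell function
- 2.2.4 Background: the rewind function
- 3. The weight file and storage size
- 4. Feature storage in the program
- 4.1 net.workspace
- 4.2 forward_convolutional_layer
- 4.3 A statement I did not understand
- 4.4 The fill_cpu function
- 5. Weight and feature table

1. Creating and running a convolutional layer

Two functions are involved: make_convolutional_layer creates a convolutional layer, and forward_convolutional_layer runs it.

    //make_convolutional_layer
    convolutional_layer make_convolutional_layer(int batch, int h, int w, int c, int n, int groups,
            int size, int stride, int padding, ACTIVATION activation,
            int batch_normalize, int binary, int xnor, int adam)
    {
        int i;
        convolutional_layer l = {0};
        l.type = CONVOLUTIONAL;

        l.groups = groups;
        l.h = h;
        l.w = w;
        l.c = c;
        l.n = n;
        l.binary = binary;
        l.xnor = xnor;
        l.batch = batch;
        l.stride = stride;
        l.size = size;
        l.pad = padding;
        l.batch_normalize = batch_normalize;

        // one 3-D kernel has c/groups*size*size weights; there are n kernels
        l.weights = calloc(c/groups*n*size*size, sizeof(float));
        l.weight_updates = calloc(c/groups*n*size*size, sizeof(float));

        l.biases = calloc(n, sizeof(float));
        l.bias_updates = calloc(n, sizeof(float));

        l.nweights = c/groups*n*size*size;
        l.nbiases = n;

        // random initialization; overwritten later when a weight file is loaded
        float scale = sqrt(2./(size*size*c/l.groups));
        for(i = 0; i < l.nweights; ++i) l.weights[i] = scale*rand_normal();
        int out_w = convolutional_out_width(l);
        int out_h = convolutional_out_height(l);
        l.out_h = out_h;
        l.out_w = out_w;
        l.out_c = n;
        l.outputs = l.out_h * l.out_w * l.out_c;
        l.inputs = l.w * l.h * l.c;

        l.output = calloc(l.batch*l.outputs, sizeof(float));
        l.delta = calloc(l.batch*l.outputs, sizeof(float));

        // function pointers used later by forward_network and friends
        l.forward = forward_convolutional_layer;
        l.backward = backward_convolutional_layer;
        l.update = update_convolutional_layer;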
        if(batch_normalize){
            l.scales = calloc(n, sizeof(float));
            l.scale_updates = calloc(n, sizeof(float));
            for(i = 0; i < n; ++i){
                l.scales[i] = 1;
            }

            l.mean = calloc(n, sizeof(float));
            l.variance = calloc(n, sizeof(float));

            l.mean_delta = calloc(n, sizeof(float));
            l.variance_delta = calloc(n, sizeof(float));

            l.rolling_mean = calloc(n, sizeof(float));
            l.rolling_variance = calloc(n, sizeof(float));
            l.x = calloc(l.batch*l.outputs, sizeof(float));
            l.x_norm = calloc(l.batch*l.outputs, sizeof(float));
        }
        if(adam){
            l.m = calloc(l.nweights, sizeof(float));
            l.v = calloc(l.nweights, sizeof(float));
            l.bias_m = calloc(n, sizeof(float));
            l.scale_m = calloc(n, sizeof(float));
            l.bias_v = calloc(n, sizeof(float));
            l.scale_v = calloc(n, sizeof(float));
        }

        l.workspace_size = get_workspace_size(l);
        l.activation = activation;

        fprintf(stderr, "conv %5d %2d x%2d /%2d %4d x%4d x%4d -> %4d x%4d x%4d %5.3f BFLOPs\n", n, size, size, stride, w, h, c, l.out_w, l.out_h, l.out_c, (2.0 * l.n * l.size*l.size*l.c/l.groups * l.out_h*l.out_w)/1000000000.);

        return l;
    }

1.1 make_convolutional_layer

1.1.1 What the layer parameters mean

The print statement below, read together with the output it produces, shows what each parameter means.

    fprintf(stderr, "conv %5d %2d x%2d /%2d %4d x%4d x%4d -> %4d x%4d x%4d %5.3f BFLOPs\n", n, size, size, stride, w, h, c, l.out_w, l.out_h, l.out_c, (2.0 * l.n * l.size*l.size*l.c/l.groups * l.out_h*l.out_w)/1000000000.);

Parameter meanings:

- n: number of filters
- size: filter size
- stride: stride
- w: input width
- h: input height
- c: number of input channels
- l.out_w: output width
- l.out_h: output height
- l.out_c: number of output channels

1.2 The forward_convolutional_layer function

    void forward_convolutional_layer(convolutional_layer l, network net)
    {
        int i, j;

        fill_cpu(l.outputs*l.batch, 0, l.output, 1);

        int m = l.n/l.groups;
        int k = l.size*l.size*l.c/l.groups;
        int n = l.out_w*l.out_h;
        for(i = 0; i < l.batch; ++i){
            for(j = 0; j < l.groups; ++j){
                float *a = l.weights + j*l.nweights/l.groups;
                float *b = net.workspace;
                float *c = l.output + (i*l.groups + j)*n*m;
                float *im = net.input + (i*l.groups + j)*l.c/l.groups*l.h*l.w;

                if (l.size == 1) {
                    b = im;
                } else {
                    im2col_cpu(im, l.c/l.groups, l.h, l.w, l.size, l.stride, l.pad,
                               b);
                }
                gemm(0,0,m,n,k,1,a,k,b,n,1,c,n);
            }
        }

        if(l.batch_normalize){
            forward_batchnorm_layer(l, net);
        } else {
            add_bias(l.output, l.biases, l.batch, l.n, l.out_h*l.out_w);
        }

        activate_array(l.output, l.outputs*l.batch, l.activation);
        if(l.binary || l.xnor) swap_binary(&l);
    }

In this function, gemm carries out the convolution as a matrix multiplication. a, b and c are pointers: a points to the weights, b to the input (net.workspace after im2col expansion, or the raw input directly when the kernel is 1x1), and c to the output. fill_cpu sets the output to 0 first so that gemm can accumulate into it.

2. How the weights are passed in

2.1 Functions involved in passing the weights

2.1.2 forward_convolutional_layer

    //forward_convolutional_layer
    int m = l.n/l.groups;
    int k = l.size*l.size*l.c/l.groups;
    int n = l.out_w*l.out_h;
    for(i = 0; i < l.batch; ++i){
        for(j = 0; j < l.groups; ++j){
            float *a = l.weights + j*l.nweights/l.groups;
            float *b = net.workspace;
            float *c = l.output + (i*l.groups + j)*n*m;
            float *im = net.input + (i*l.groups + j)*l.c/l.groups*l.h*l.w;

            if (l.size == 1) {
                b = im;
            } else {
                im2col_cpu(im, l.c/l.groups, l.h, l.w, l.size, l.stride, l.pad, b);
            }
            gemm(0,0,m,n,k,1,a,k,b,n,1,c,n);
        }
    }

The weights are reached through

    float *a = l.weights + j*l.nweights/l.groups;

where l.weights is a float pointer stored in the layer struct.

    //make_convolutional_layer
    l.weights = calloc(c/groups*n*size*size, sizeof(float));
    l.weight_updates = calloc(c/groups*n*size*size, sizeof(float));

make_convolutional_layer only allocates the memory behind l.weights (and fills it with random initial values). The number of elements is the size of one 3-D kernel (c*size*size, or c/groups*size*size with grouping) multiplied by the number of kernels (n).

The Darknet code used for YOLOv3 supports grouped convolution, which is why there is a groups parameter. In the loops above, batch iterates over the images in a batch, while groups splits the input channels and the filters into groups that are convolved separately (see the l.c/l.groups and l.n/l.groups terms). For this analysis both batch and groups can be treated as 1 and ignored.

We still need to find where the weight values are written into the memory that l.weights points to.

2.1.3 parse_convolutional

    //parser.c parse_convolutional
    convolutional_layer parse_convolutional(list *options, size_params params)
    {
        int n = option_find_int(options, "filters",1);
        int size = option_find_int(options, "size",1);
        int stride = option_find_int(options, "stride",1);
        int pad = option_find_int_quiet(options, "pad",0);
        int padding = option_find_int_quiet(options, "padding",0);
        int groups = option_find_int_quiet(options, "groups", 1);
        if(pad) padding = size/2;

        char *activation_s = option_find_str(options, "activation", "logistic");
        ACTIVATION activation = get_activation(activation_s);

        int batch,h,w,c;
        h = params.h;
        w =
            params.w;
        c = params.c;
        batch=params.batch;
        if(!(h && w && c)) error("Layer before convolutional layer must output image.");
        int batch_normalize = option_find_int_quiet(options, "batch_normalize", 0);
        int binary = option_find_int_quiet(options, "binary", 0);
        int xnor = option_find_int_quiet(options, "xnor", 0);

        convolutional_layer layer = make_convolutional_layer(batch,h,w,c,n,groups,size,stride,padding,activation, batch_normalize, binary, xnor, params.net->adam);
        layer.flipped = option_find_int_quiet(options, "flipped", 0);
        layer.dot = option_find_float_quiet(options, "dot", 0);

        return layer;
    }

This function creates a convolutional layer from the parameters given in the cfg file. It calls make_convolutional_layer to build the layer and allocate the memory that will hold the weights. It still does not show where the weights are loaded. The statements that actually load the weights are in load_weights.

2.2 load_weights

    //darknet.c main
    else if (0 == strcmp(argv[1], "detect")){
        float thresh = find_float_arg(argc, argv, "-thresh", .5);
        char *filename = (argc > 4) ? argv[4]: 0;
        char *outfile = find_char_arg(argc, argv, "-out", 0);
        int fullscreen = find_arg(argc, argv, "-fullscreen");
        test_detector("cfg/coco.data", argv[2], argv[3], filename, thresh, .5, outfile, fullscreen);
    }

    //detector.c test_detector
    network *net = load_network(cfgfile, weightfile, 0);

    //network.c load_network
    network *load_network(char *cfg, char *weights, int clear)
    {
        network *net = parse_network_cfg(cfg);
        if(weights && weights[0] != 0){
            load_weights(net, weights);
        }
        if(clear) (*net->seen) = 0;
        return net;
    }

Following the calls inward from main, the core weight-loading call turns out to be load_weights.

    //parser.c load_weights
    void load_weights(network *net, char *filename)
    {
        load_weights_upto(net, filename, 0, net->n);
    }

    //load_weights_upto
    void load_weights_upto(network *net, char *filename, int start, int cutoff)
    {
        fprintf(stderr, "Loading weights from %s...", filename);
        fflush(stdout);
        FILE *fp = fopen(filename, "rb");
        if(!fp) file_error(filename);

        // file header: three version ints, then the number of images seen
        int major;
        int minor;
        int revision;
        fread(&major, sizeof(int), 1, fp);
        fread(&minor, sizeof(int), 1, fp);
        fread(&revision, sizeof(int), 1, fp);
        if ((major*10 + minor) >= 2 && major < 1000 && minor < 1000){
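            fread(net->seen, sizeof(size_t), 1, fp);
        } else {
            int iseen = 0;
            fread(&iseen, sizeof(int), 1, fp);
            *net->seen = iseen;
        }
        int transpose = (major > 1000) || (minor > 1000);

        int i;
        for(i = start; i < net->n && i < cutoff; ++i){
            layer l = net->layers[i];
            if (l.dontload) continue;
            if(l.type == CONVOLUTIONAL || l.type == DECONVOLUTIONAL){
                load_convolutional_weights(l, fp);
            }
            // branches for the other layer types are left out of this excerpt
            if(l.type == LOCAL){
                int locations = l.out_w*l.out_h;
                int size = l.size*l.size*l.c*l.n*locations;
                fread(l.biases, sizeof(float), l.outputs, fp);
                fread(l.weights, sizeof(float), size, fp);
            }
        }
        fprintf(stderr, "Done!\n");
        fclose(fp);
    }

This function opens the weight file through the file pointer fp and loads the weights layer by layer, according to the type of each layer in the network.

fopen : https://baike.baidu.com/item/fopen/10942321?fr=aladdin

fread : https://baike.baidu.com/item/fread/10942353?fr=aladdin

2.2.1 Background: fopen

Prototype: FILE * fopen(const char * path, const char * mode);

Return value: if the file is opened successfully, a file pointer to the stream is returned. If opening fails, NULL is returned and the error code is stored in errno.

    FILE *fp = fopen(filename, "rb");

In the code above, b means binary, r means read-only, and r+ means read and write, so rb opens a binary file read-only.

2.2.2 Background: fread

Prototype: size_t fread ( void *buffer, size_t size, size_t count, FILE *stream) ;

- buffer: address of the memory that receives the data
- size: size of each item to read, in bytes
- count: number of items to read, each of size bytes
- stream: the input stream

Return value: the number of items actually read, which is less than count only if the end of the file is reached or an error occurs.

    fread(l.biases, sizeof(float), l.n, fp);
    if (l.batch_normalize && (!l.dontloadscales)){
        fread(l.scales, sizeof(float), l.n, fp);
        fread(l.rolling_mean, sizeof(float), l.n, fp);
        fread(l.rolling_variance, sizeof(float), l.n, fp);
    }
    fread(l.weights, sizeof(float), num, fp);

In the code above, the biases are read first (n kernels have n biases). With batch normalization, n scales, n rolling means and n rolling variances follow. The weights are read last, num = l.nweights of them.

2.2.3 Background: the ftell function

ftell returns the current value of the file position indicator, as the number of bytes from the beginning of the file. For example:

    long t;
    t=ftell(pf);

If the call fails, ftell returns -1L. The length of a file can be measured like this:

    fseek(fp,0L,SEEK_END);
    t=ftell(fp);

2.2.4 Background: the rewind function

It is called as rewind(pf); and has no return value. It moves the file position indicator back to the beginning of the file.

3. The weight file and storage size

We added print statements to load_weights_upto and load_convolutional_weights. The output is:

    Loading weights from obj_9000.weights...
    Loading weights for conv layer 0...
    Weight file location before load weights 16...
    biasis num:16,Weights num:432...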
    Batch normalize and loadscales,rolling_means and variance:48...
    Weight file location after load weights 2000...
    Loading weights for conv layer 2...
    Weight file location before load weights 2000...
    biasis num:32,Weights num:4608...
    Batch normalize and loadscales,rolling_means and variance:96...
    Weight file location after load weights 20944...
    Loading weights for conv layer 4...
    Weight file location before load weights 20944...
    biasis num:64,Weights num:18432...
    Batch normalize and loadscales,rolling_means and variance:192...
    Weight file location after load weights 95696...
    Loading weights for conv layer 6...
    Weight file location before load weights 95696...
    biasis num:128,Weights num:73728...
    Batch normalize and loadscales,rolling_means and variance:384...
    Weight file location after load weights 392656...
    Loading weights for conv layer 8...
    Weight file location before load weights 392656...
    biasis num:256,Weights num:294912...
    Batch normalize and loadscales,rolling_means and variance:768...
    Weight file location after load weights 1576400...
    Loading weights for conv layer 10...
    Weight file location before load weights 1576400...
    biasis num:512,Weights num:1179648...
    Batch normalize and loadscales,rolling_means and variance:1536...
    Weight file location after load weights 6303184...
    Loading weights for conv layer 12...
    Weight file location before load weights 6303184...
    biasis num:1024,Weights num:4718592...
    Batch normalize and loadscales,rolling_means and variance:3072...
    Weight file location after load weights 6364744...
    Loading weights for conv layer 13...
    Weight file location before load weights 6364744...
    biasis num:1024,Weights num:9437184...
    Batch normalize and loadscales,rolling_means and variance:3072...
    Weight file location after load weights 6364744...
    Loading weights for conv layer 14...
    Weight file location before load weights 6364744...
    biasis num:30,Weights num:30720...
    Weight file location after load weights 6364744...
    Done!

4.
Feature storage in the program

Inspecting the program shows that the feature data fed into the convolution is held in workspace, which is a pointer to a scratch buffer. Each layer's output feature map is stored in l.output.

    network *parse_network_cfg(char *filename)
    {
        list *sections = read_cfg(filename);
        node *n = sections->front;
        if(!n) error("Config file has no sections");
        network *net = make_network(sections->size - 1);
        net->gpu_index = gpu_index;
        size_params params;

        section *s = (section *)n->val;
        list *options = s->options;
        if(!is_network(s)) error("First section must be [net] or [network]");
        parse_net_options(options, net);

        params.h = net->h;
        params.w = net->w;
        params.c = net->c;
        params.inputs = net->inputs;
        params.batch = net->batch;
        params.time_steps = net->time_steps;
        params.net = net;

        size_t workspace_size = 0;
        n = n->next;
        int count = 0;
        free_section(s);
        fprintf(stderr, "layer filters size input output\n");
        while(n){
            params.index = count;
            fprintf(stderr, "%5d ", count);
            s = (section *)n->val;
            options = s->options;
            layer l = {0};
            LAYER_TYPE lt = string_to_layer_type(s->type);
            if(lt == CONVOLUTIONAL){
                l = parse_convolutional(options, params);
            }else if(lt == DECONVOLUTIONAL){
                l = parse_deconvolutional(options, params);
            }else if(lt == LOCAL){
                l = parse_local(options, params);
            }else if(lt == ACTIVE){
                l = parse_activation(options, params);
            }else if(lt == LOGXENT){
                l = parse_logistic(options, params);
            }else if(lt == L2NORM){
                l = parse_l2norm(options, params);
            }else if(lt == RNN){
                l = parse_rnn(options, params);
            }else if(lt == GRU){
                l = parse_gru(options, params);
            }else if (lt == LSTM) {
                l = parse_lstm(options, params);
            }else if(lt == CRNN){
                l = parse_crnn(options, params);
            }else if(lt == CONNECTED){
                l = parse_connected(options, params);
            }else if(lt == CROP){
                l = parse_crop(options, params);
            }else if(lt == COST){
                l = parse_cost(options, params);
            }else if(lt == REGION){
                l = parse_region(options, params);
            }else if(lt == YOLO){
                l = parse_yolo(options, params);
            }else if(lt == DETECTION){
                l = parse_detection(options, params);
            }else if(lt == SOFTMAX){
                l = parse_softmax(options, params);
                net->hierarchy = l.softmax_tree;
            }else if(lt ==
                      NORMALIZATION){
                l = parse_normalization(options, params);
            }else if(lt == BATCHNORM){
                l = parse_batchnorm(options, params);
            }else if(lt == MAXPOOL){
                l = parse_maxpool(options, params);
            }else if(lt == REORG){
                l = parse_reorg(options, params);
            }else if(lt == AVGPOOL){
                l = parse_avgpool(options, params);
            }else if(lt == ROUTE){
                l = parse_route(options, params, net);
            }else if(lt == UPSAMPLE){
                l = parse_upsample(options, params, net);
            }else if(lt == SHORTCUT){
                l = parse_shortcut(options, params, net);
            }else if(lt == DROPOUT){
                l = parse_dropout(options, params);
                l.output = net->layers[count-1].output;
                l.delta = net->layers[count-1].delta;
            }else{
                fprintf(stderr, "Type not recognized: %s\n", s->type);
            }
            l.clip = net->clip;
            l.truth = option_find_int_quiet(options, "truth", 0);
            l.onlyforward = option_find_int_quiet(options, "onlyforward", 0);
            l.stopbackward = option_find_int_quiet(options, "stopbackward", 0);
            l.dontsave = option_find_int_quiet(options, "dontsave", 0);
            l.dontload = option_find_int_quiet(options, "dontload", 0);
            l.dontloadscales = option_find_int_quiet(options, "dontloadscales", 0);
            l.learning_rate_scale = option_find_float_quiet(options, "learning_rate", 1);
            l.smooth = option_find_float_quiet(options, "smooth", 0);
            option_unused(options);
            net->layers[count] = l;
            // keep the largest per-layer workspace requirement
            if (l.workspace_size > workspace_size) workspace_size = l.workspace_size;
            free_section(s);
            n = n->next;
            ++count;
            if(n){
                params.h = l.out_h;
                params.w = l.out_w;
                params.c = l.out_c;
                params.inputs = l.outputs;
            }
        }
        free_list(sections);
        layer out = get_network_output_layer(net);
        net->outputs = out.outputs;
        net->truths = out.outputs;
        if(net->layers[net->n-1].truths) net->truths = net->layers[net->n-1].truths;
        net->output = out.output;
        net->input = calloc(net->inputs*net->batch, sizeof(float));
        net->truth = calloc(net->truths*net->batch, sizeof(float));
    #ifdef GPU
        net->output_gpu = out.output_gpu;
        net->input_gpu = cuda_make_array(net->input, net->inputs*net->batch);
        net->truth_gpu = cuda_make_array(net->truth,
                                         net->truths*net->batch);
    #endif
        if(workspace_size){
            //printf("%ld\n", workspace_size);
    #ifdef GPU
            if(gpu_index >= 0){
                net->workspace = cuda_make_array(0, (workspace_size-1)/sizeof(float)+1);
            }else {
                net->workspace = calloc(1, workspace_size);
            }
    #else
            net->workspace = calloc(1, workspace_size);
            // workspace_size is a size_t, so it is printed with %zu
            fprintf(stderr, "Network parse Done! network WorkSpace_size:%zu\n", workspace_size);
    #endif
        }
        return net;
    }

parse_network_cfg sets the network's workspace_size to the maximum of the workspace_size values required by the individual layers.

4.1 net.workspace

parse_convolutional reads the appropriate parameters from the cfg file and passes them to make_convolutional_layer, which does the actual allocation for the layer. forward_convolutional_layer performs the layer's computation. The im2col-expanded input is held in net.workspace, and the result is written to l.output.

    for(i = 0; i < l.batch; ++i){
        for(j = 0; j < l.groups; ++j){
            float *a = l.weights + j*l.nweights/l.groups;
            float *b = net.workspace;
            float *c = l.output + (i*l.groups + j)*n*m;
            float *im = net.input + (i*l.groups + j)*l.c/l.groups*l.h*l.w;

            if (l.size == 1) {
                b = im;
            } else {
                im2col_cpu(im, l.c/l.groups, l.h, l.w, l.size, l.stride, l.pad, b);
            }
            gemm(0,0,m,n,k,1,a,k,b,n,1,c,n);
        }
    }

In make_convolutional_layer, workspace_size is the amount of workspace this layer needs:

    l.workspace_size = get_workspace_size(l);

4.2 forward_convolutional_layer

This function performs the convolution through pointers; the input feature map it multiplies is reached through the pointer net.workspace. We need to find where this function is called in order to find where net.workspace is used.

    //detector.c test_detector
    void test_detector(char *datacfg, char *cfgfile, char *weightfile, char *filename, float thresh, float hier_thresh, char *outfile, int fullscreen)
    {
        list *options = read_data_cfg(datacfg);
        char *name_list = option_find_str(options, "names", "data/names.list");
        char **names = get_labels(name_list);

        image **alphabet = load_alphabet();
        network *net = load_network(cfgfile, weightfile, 0);
        set_batch_network(net, 1);
        srand(2222222);
        double time;
        char buff[256];
        char *input = buff;
        float nms=.45;
        while(1){
            if(filename){
                strncpy(input, filename, 256);
            } else {
                printf("Enter Image Path: ");
                fflush(stdout);
                input = fgets(input, 256, stdin);
                if(!input) return;
                strtok(input, "\n");
            }
            image im = load_image_color(input,0,0);
            image sized =
                letterbox_image(im, net->w, net->h);
            layer l = net->layers[net->n-1];

            float *X = sized.data;
            time=what_time_is_it_now();
            network_predict(net, X);
            printf("%s: Predicted in %f seconds.\n", input, what_time_is_it_now()-time);
            int nboxes = 0;
            detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, 0, 1, &nboxes);
            if (nms) do_nms_sort(dets, nboxes, l.classes, nms);
            draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);
            free_detections(dets, nboxes);
            if(outfile){
                save_image(im, outfile);
            }
            else{
                save_image(im, "predictions");
            }

            free_image(im);
            free_image(sized);
            if (filename) break;
        }
    }

This function first creates the network, then reads the image, and then passes the image to network_predict.

    // network.c
    float *network_predict(network *net, float *input)
    {
        network orig = *net;
        net->input = input;
        net->truth = 0;
        net->train = 0;
        net->delta = 0;
        forward_network(net);
        float *out = net->output;
        *net = orig;
        return out;
    }

    void forward_network(network *netp)
    {
        network net = *netp;
        int i;
        for(i = 0; i < net.n; ++i){
            net.index = i;
            layer l = net.layers[i];
            if(l.delta){
                fill_cpu(l.outputs * l.batch, 0, l.delta, 1);
            }
            l.forward(l, net);
            // the output of this layer becomes the input of the next one
            net.input = l.output;
            if(l.truth) {
                net.truth = l.output;
            }
        }
        calc_network_cost(netp);
    }

4.3 A statement I did not understand

    //defined in darknet.h
    struct layer{
        void (*forward) (struct layer, struct network);
        // other members omitted
    };

    //in forward_network in network.c
    l.forward(l, net);

forward is a function pointer stored in the layer struct. make_convolutional_layer assigns l.forward = forward_convolutional_layer, so for a convolutional layer the call l.forward(l, net) runs forward_convolutional_layer(l, net).

4.4 The fill_cpu function

5. Weight and feature table

The output below is the weight and storage table after our structural change.

    gpu@gpu-SYS-7048GR-TR:/1t_mechan_disk/gpudata/datasets/xxr/yolov3_dbg$ ./darknet detect head-hw-v2.cfg obj_9000.weights data/dog.jpg
    layer     filters    size              input                output
        0 conv     16  3 x 3 / 1   416 x 416 x   3   ->   416 x 416 x  16  0.150 BFLOPs
    Layer size: 18690048
        1 max          2 x 2 / 2   416 x 416 x  16   ->   208 x 208 x  16
        2 conv     32  3 x 3 / 1   208 x 208 x  16   ->   208 x 208 x  32  0.399 BFLOPs
    Layer size: 24920064
        3 max          2 x 2 / 2   208 x 208 x  32   ->   104 x 104 x  32
        4 conv     64  3 x 3 / 1   104 x 104 x  32   ->   104 x 104 x  64  0.399 BFLOPs
    Layer size: 12460032
        5 max          2 x 2 / 2   104 x 104 x  64   ->    52 x  52 x  64
        6 conv    128  3 x 3 / 1    52 x  52 x  64   ->    52 x  52 x 128  0.399 BFLOPs
    Layer size: 6230016
        7 max          2 x 2 / 2    52 x  52 x 128   ->    26 x  26 x 128
        8 conv    256  3 x 3 / 1    26 x  26 x 128   ->    26 x  26 x 256  0.399 BFLOPs
    Layer size: 3115008
        9 max          2 x 2 / 2    26 x  26 x 256   ->    13 x  13 x 256
       10 conv    512  3 x 3 / 1    13 x  13 x 256   ->    13 x  13 x 512  0.399 BFLOPs
    Layer size: 1557504
       11 max          2 x 2 / 1    13 x  13 x 512   ->    13 x  13 x 512
       12 conv   1024  3 x 3 / 1    13 x  13 x 512   ->    13 x  13 x1024  1.595 BFLOPs
    Layer size: 3115008
       13 conv   1024  3 x 3 / 1    13 x  13 x1024   ->    13 x  13 x1024  3.190 BFLOPs
    Layer size: 6230016
       14 conv     30  1 x 1 / 1    13 x  13 x1024   ->    13 x  13 x  30  0.010 BFLOPs
    Layer size: 692224
       15 detection
    mask_scale: Using default '1.000000'
    Network workspace 24920064
    Loading weights from obj_9000.weights...
    Loading weights for conv layer 0...
    Weight file location before load weights 16...
    biasis num:16,Weights num:432...
    Batch normalize and loadscales,rolling_means and variance:48...
    Weight file location after load weights 2000...
    Loading weights for conv layer 2...
    Weight file location before load weights 2000...
    biasis num:32,Weights num:4608...
    Batch normalize and loadscales,rolling_means and variance:96...
    Weight file location after load weights 20944...
    Loading weights for conv layer 4...
    Weight file location before load weights 20944...
    biasis num:64,Weights num:18432...
    Batch normalize and loadscales,rolling_means and variance:192...
    Weight file location after load weights 95696...
    Loading weights for conv layer 6...
    Weight file location before load weights 95696...
    biasis num:128,Weights num:73728...
    Batch normalize and loadscales,rolling_means and variance:384...
    Weight file location after load weights 392656...
    Loading weights for conv layer 8...
    Weight file location before load weights 392656...
    biasis num:256,Weights num:294912...
    Batch normalize and loadscales,rolling_means and variance:768...
    Weight file location after load weights 1576400...
    Loading weights for conv layer 10...
    Weight file location before load weights 1576400...
    biasis num:512,Weights num:1179648...
    Batch normalize and loadscales,rolling_means and variance:1536...
    Weight file location after load weights 6303184...
    Loading weights for conv layer 12...
    Weight file location before load weights 6303184...
    biasis num:1024,Weights num:4718592...
    Batch normalize and loadscales,rolling_means and variance:3072...
    Weight file location after load weights 6364744...
    Loading weights for conv layer 13...
    Weight file location before load weights 6364744...
    biasis num:1024,Weights num:9437184...
    Batch normalize and loadscales,rolling_means and variance:3072...
    Weight file location after load weights 6364744...
    Loading weights for conv layer 14...
    Weight file location before load weights 6364744...
    biasis num:30,Weights num:30720...
    Weight file location after load weights 6364744...
    Done!
    M:16,N:173056,K:27 gemm_nn:A[432],B[4672512],C[2768896]
    M:32,N:43264,K:144 gemm_nn:A[4608],B[6230016],C[1384448]
    M:64,N:10816,K:288 gemm_nn:A[18432],B[3115008],C[692224]
    M:128,N:2704,K:576 gemm_nn:A[73728],B[1557504],C[346112]
    M:256,N:676,K:1152 gemm_nn:A[294912],B[778752],C[173056]
    M:512,N:169,K:2304 gemm_nn:A[1179648],B[389376],C[86528]
    M:1024,N:169,K:4608 gemm_nn:A[4718592],B[778752],C[173056]
    M:1024,N:169,K:9216 gemm_nn:A[9437184],B[1557504],C[173056]
    M:30,N:169,K:1024 gemm_nn:A[30720],B[173056],C[5070]
    data/dog.jpg: Predicted in 1.437287 seconds.
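The "Layer size" values and the gemm M, N, K values in the output above can be cross-checked from the layer shapes alone. The following is a minimal sketch under stated assumptions, not Darknet code: `conv_shape`, `gemm_dims`, `conv_out_dim` and `conv_gemm_dims` are hypothetical names introduced here, and the workspace formula (out_h * out_w * size * size * c / groups floats) is inferred from the printed numbers and from how forward_convolutional_layer uses net.workspace.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical description of one convolutional layer (not a Darknet struct). */
typedef struct {
    int h, w, c;        /* input height, width, channels */
    int n;              /* number of filters */
    int size;           /* kernel side length */
    int stride, pad;    /* stride and zero padding */
    int groups;         /* channel groups, 1 for an ordinary convolution */
} conv_shape;

/* Matrix sizes seen by gemm, plus the im2col buffer size in bytes. */
typedef struct {
    long m, n, k;
    size_t workspace_bytes;
} gemm_dims;

/* Output extent along one axis for the usual convolution arithmetic. */
static int conv_out_dim(int in, int size, int stride, int pad)
{
    return (in + 2 * pad - size) / stride + 1;
}

/* M = filters per group, K = weights per filter, N = output positions. */
static gemm_dims conv_gemm_dims(conv_shape s)
{
    assert(s.groups > 0 && s.stride > 0);
    int out_h = conv_out_dim(s.h, s.size, s.stride, s.pad);
    int out_w = conv_out_dim(s.w, s.size, s.stride, s.pad);

    gemm_dims d;
    d.m = s.n / s.groups;
    d.k = (long)s.size * s.size * s.c / s.groups;
    d.n = (long)out_w * out_h;
    /* im2col writes a K x N matrix of floats into the workspace */
    d.workspace_bytes = (size_t)d.n * (size_t)d.k * sizeof(float);
    return d;
}
```

For layer 0 (416 x 416 x 3 input, 16 filters of size 3, stride 1, pad 1) this gives M = 16, N = 173056, K = 27 and a workspace of 18690048 bytes, matching the first "Layer size" line and the first gemm line. Layer 2 gives 24920064 bytes, which is the largest value in the table and therefore the reported network workspace.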

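The file offsets printed while loading the weights follow directly from the read order described in section 2.2.2: biases, then (with batch normalization) scales, rolling means and rolling variances, then the weights. The sketch below only illustrates that arithmetic. `conv_weight_count` and `conv_record_bytes` are hypothetical helper names, not Darknet functions, and the starting offset of 16 bytes is taken from the log rather than derived.

```c
#include <assert.h>
#include <stddef.h>

/* Number of weights in a conv layer: one (c/groups x size x size) kernel per filter. */
static size_t conv_weight_count(int c, int n, int size, int groups)
{
    assert(groups > 0);
    return (size_t)(c / groups) * (size_t)n * (size_t)size * (size_t)size;
}

/* Bytes one conv layer occupies in the weight file, following the fread order:
 * n biases, optionally 3*n batch-norm values, then the weights. */
static size_t conv_record_bytes(int c, int n, int size, int groups, int batch_normalize)
{
    size_t floats = (size_t)n + conv_weight_count(c, n, size, groups);
    if (batch_normalize) {
        floats += 3 * (size_t)n;   /* scales, rolling_mean, rolling_variance */
    }
    return floats * sizeof(float);
}
```

Starting from offset 16, adding conv_record_bytes(3, 16, 3, 1, 1) for layer 0 gives 2000, adding conv_record_bytes(16, 32, 3, 1, 1) for layer 2 gives 20944, and adding conv_record_bytes(32, 64, 3, 1, 1) for layer 4 gives 95696, in agreement with the "Weight file location after load weights" lines, assuming 4-byte floats.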

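The call l.forward(l, net) from section 4.3 and the zero-fill from section 4.4 can be illustrated with a small standalone program fragment. This is a sketch only: `toy_layer`, `toy_fill`, `forward_double`, `forward_negate` and `toy_forward_network` are hypothetical names that mimic the pattern, and they are far simpler than the real Darknet layer, fill_cpu and forward_network.

```c
#include <assert.h>

/* A layer carries a pointer to the function that runs it, as struct layer does. */
struct toy_layer {
    int outputs;                                        /* number of output values */
    float *output;                                      /* buffer owned by the layer */
    void (*forward)(struct toy_layer, const float *);   /* set when the layer is built */
};

/* Set n elements of x to value, stepping by incx (same argument order as the fill_cpu calls). */
static void toy_fill(int n, float value, float *x, int incx)
{
    for (int i = 0; i < n; ++i) x[i * incx] = value;
}

/* Two interchangeable "layer types": zero the output, then accumulate into it. */
static void forward_double(struct toy_layer l, const float *input)
{
    toy_fill(l.outputs, 0.0f, l.output, 1);
    for (int i = 0; i < l.outputs; ++i) l.output[i] += 2.0f * input[i];
}

static void forward_negate(struct toy_layer l, const float *input)
{
    toy_fill(l.outputs, 0.0f, l.output, 1);
    for (int i = 0; i < l.outputs; ++i) l.output[i] += -input[i];
}

/* Run every layer in order; each layer's output becomes the next layer's input. */
static const float *toy_forward_network(struct toy_layer *layers, int n, const float *input)
{
    assert(n > 0);
    for (int i = 0; i < n; ++i) {
        struct toy_layer l = layers[i];   /* copied by value; output pointer is shared */
        l.forward(l, input);              /* calls whichever function this layer stores */
        input = l.output;
    }
    return input;
}
```

The loop never needs to know the layer type. Whatever function was stored in forward when the layer was created is the one that runs, which is the same mechanism that lets forward_network call forward_convolutional_layer for convolutional layers.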